How I Actually Keep My Workflows Documented Without Losing My Mind

1. Google Docs is still the fastest way to capture workflow changes

If I’m mid-rewire and something weird happens — like a webhook payload shape quietly changing — I slam open Google Docs and write it like I’m live-tweeting: “Zap step fails if ‘status’ = array not string” or “Airtable webhook payload now has only 100 records per page”. It’s not pretty, but it’s searchable later. I used to rely on Notion, but syncing lag there once made me lose about twenty minutes of debugging notes. Docs autosaving just works.

Key thing: I don’t use formatting. It’s just a raw text dump, with hard returns between separate zaps or triggers. Even pasted snippets go in raw, like this:

```
{
  "status": ["active"]  // this broke it
}
```

Later I’ll usually paste that into a better tracker (Obsidian if it’s for me, Notion if it’s team-based), but the initial capture always happens here. It’s fast, forgiving, and already open in a tab half the time.
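That array-vs-string flip is the kind of shape change a defensive code step can absorb before it reaches a filter. A minimal Python sketch, assuming the step hands you the payload as a plain dict and that `status` is the only field that flips shape (`normalize_status` is a hypothetical helper, not anything Zapier provides):

```python
from typing import Any


def normalize_status(payload: dict[str, Any]) -> dict[str, Any]:
    """Return a copy of the payload where 'status' is always a string.

    A list is joined with commas; a missing or None status becomes "".
    """
    fixed = dict(payload)  # shallow copy so the incoming payload is untouched
    status = fixed.get("status")
    if isinstance(status, list):
        # The shape change from my note: ["active"] instead of "active"
        fixed["status"] = ",".join(str(item) for item in status)
    elif status is None:
        fixed["status"] = ""
    else:
        fixed["status"] = str(status)
    return fixed


print(normalize_status({"status": ["active"]}))  # {'status': 'active'}
print(normalize_status({"status": "active"}))    # {'status': 'active'}
```

Joining with commas is an arbitrary choice; if downstream steps only care about the first value, take `status[0]` instead.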

If you try to be too organized mid-debug or mid-build, you lose the thread. Trust your ability to sort chaos later.

2. Workspaces rarely match real data access and that ruins logs

Someone on the team updated a Make scenario at 11:30pm without realizing it was running with my outdated Airtable API token from weeks ago. The error didn’t surface until three steps later, when a webhook fired again, recursively. The scenario ran 14 times silently before anyone noticed the Slack bot melting down.

Here’s the issue: Make doesn’t always force auth renewal when you duplicate org workspaces. You think you’re operating in a sandbox, but it’ll silently reuse your last login or token. Unless you explicitly log out of every connected service and re-authenticate, it may default to whatever was cached client-side.

One fix: in shared Make orgs, we now require naming every connection by WHO it’s tied to (e.g., airtable_admin_nate), not just the platform. It’s dumb that this isn’t standard UX, but once it’s a habit, you start catching these clashes early — especially when logging why something failed after being touched by “someone at midnight”.

This kind of bug costs hours. Don’t try to fix the payload until you’ve verified that the tokens actually belong to the right human.
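One way to do that check for Airtable: its Web API has a whoami endpoint (`GET https://api.airtable.com/v0/meta/whoami`) that returns the user ID behind a token. The Python sketch below uses only the standard library and assumes you keep a hypothetical mapping from connection names like `airtable_admin_nate` to the Airtable user ID each one should belong to. Confirm the endpoint and its response fields against Airtable’s current API reference before relying on it.

```python
import json
import urllib.request

WHOAMI_URL = "https://api.airtable.com/v0/meta/whoami"

# Hypothetical mapping: connection name -> Airtable user ID it must belong to
EXPECTED_OWNERS = {"airtable_admin_nate": "usrEXAMPLE00000"}


def fetch_token_owner(token: str) -> dict:
    """Ask Airtable which user a token belongs to (this makes a network call)."""
    request = urllib.request.Request(
        WHOAMI_URL, headers={"Authorization": f"Bearer {token}"}
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def token_belongs_to(whoami: dict, connection_name: str) -> bool:
    """True only if the token's user ID matches the connection's named owner."""
    expected = EXPECTED_OWNERS.get(connection_name)
    return expected is not None and whoami.get("id") == expected


# Usage (network call left commented out; the token is a placeholder):
# owner = fetch_token_owner("YOUR_TOKEN")
# if not token_belongs_to(owner, "airtable_admin_nate"):
#     raise SystemExit("airtable_admin_nate is running on someone else's token")
```

Keeping the comparison separate from the network call means you can run it against a saved whoami response while you’re mid-debug.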

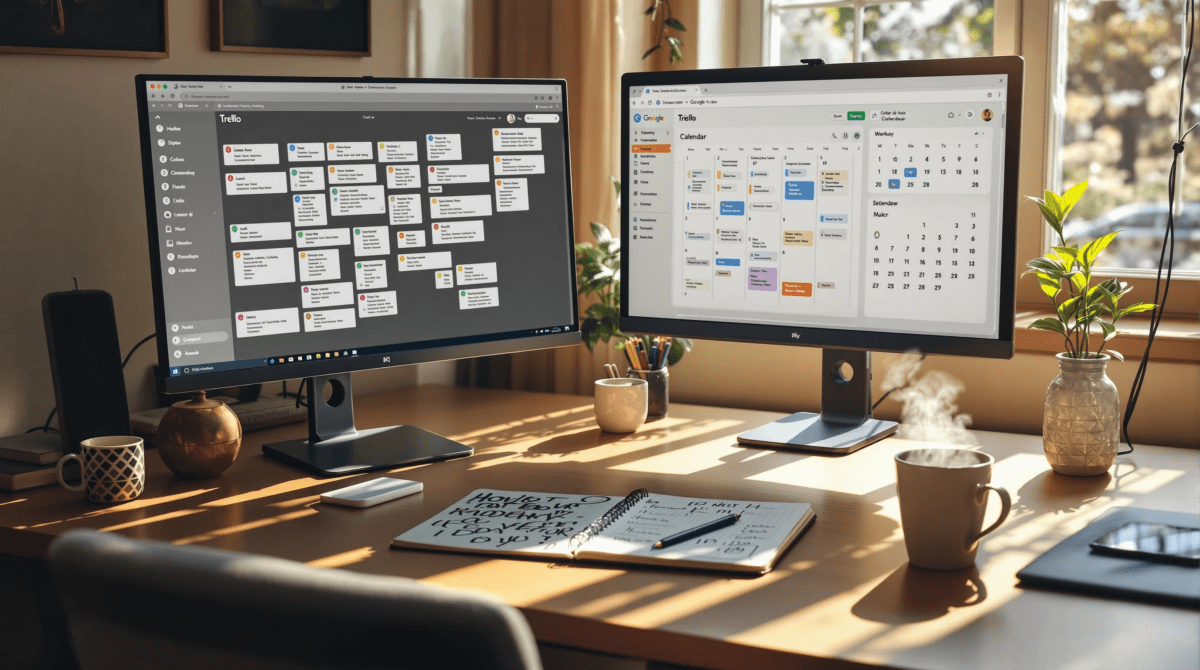

3. Broken context switching kills automation documentation every time

I had six tabs open, including one in Notion describing the Zapier flow, one in Zapier where I was editing the workflow, and one for OpenAI where I was tweaking the GPT call in a code step. Somewhere in that mess, I forgot to update the Notion doc after changing which field mapped into the prompt. Later, someone copied the flow from Notion, and the AI output made no sense because it was referencing the wrong field.

This happens constantly. You think you’ll circle back. You won’t. Zapier gives you no “edit this description?” nudge after you change a field, and Notion has no way of knowing the Zap changed.

What helped slightly: embedding zap links as full auto-generated URLs (the ones with the ID) directly in inline Notion text. Then I built a tiny helper widget in Coda that cross-referenced Notion rows with actual Zap names and status via the Zapier platform API.
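I won’t reproduce the Coda widget or guess at the Zapier API’s response format, but the cross-check itself is small. A Python sketch, assuming you’ve already pulled the Notion rows and the live Zap list into plain dicts keyed by Zap ID, each with `name` and `status` fields (those field names and the `"on"` status value are assumptions):

```python
from dataclasses import dataclass


@dataclass
class Drift:
    zap_id: str
    problem: str


def find_drift(documented: dict[str, dict], live: dict[str, dict]) -> list[Drift]:
    """Compare documented Zaps with live ones, both keyed by Zap ID."""
    drift: list[Drift] = []
    for zap_id, doc in documented.items():
        current = live.get(zap_id)
        if current is None:
            drift.append(Drift(zap_id, "documented but missing from the account"))
            continue
        if current.get("name") != doc.get("name"):
            drift.append(
                Drift(zap_id, f"renamed: doc {doc.get('name')!r}, live {current.get('name')!r}")
            )
        if current.get("status") != "on":
            drift.append(Drift(zap_id, f"not running: status {current.get('status')!r}"))
    # Zaps that exist but never made it into the docs
    for zap_id in sorted(live.keys() - documented.keys()):
        drift.append(Drift(zap_id, "live but undocumented"))
    return drift


documented = {"101": {"name": "OPS intake"}, "102": {"name": "Old digest"}}
live = {"101": {"name": "OPS intake", "status": "on"}, "103": {"name": "New", "status": "on"}}
for item in find_drift(documented, live):
    print(item.zap_id, item.problem)
```

It won’t catch the field remap from my story, since that lives inside a step’s config; it only flags docs that have obviously drifted from what’s deployed.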

Yes, it’s duct-taped. But until tools actually cross-update each other, context-switch documentation will always lag.

4. You only notice architecture flaws when something gets deleted

If a team member loses access or deletes a shared Airtable base, every automation you built on it blows up silently unless you account for fallback logic. The worst failure mode is when the webhook still fires but has no content — so the scenario runs, but downstream everything is null.

It took me too long to catch that one because no step was actually throwing an error. Make just processed the empty data and kept going like everything was fine. This kind of silent failure is the absolute worst for long-running automations where the input isn’t obviously wrong.

Quick conditional structure that saved me:

```json
{
  "run": "only_if",
  "condition": "data.length > 0"
}
```

It’s not always JSON (that’s just how I built it into one scripting module), but the concept applies: check early, and bail if someone deleted the input source. The key is to document which dependencies can safely be deleted and which absolutely must persist. In Notion, the checklist I made was titled “If deleted, what breaks?”

The answer is: usually more than you’d expect.
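Here is that early bail as a Python sketch, assuming the payload’s records arrive as a list of dicts under a `data` key (a hypothetical shape). It also treats records whose fields are all null or empty as “no data”, which covers the case where the webhook keeps firing after the base is gone:

```python
from typing import Any, Optional

EMPTY_VALUES = (None, "", [], {})


def has_usable_records(records: Optional[list[dict[str, Any]]]) -> bool:
    """Python take on the only_if / "data.length > 0" check, plus an all-empty check."""
    if not records:
        # None or []: the input source may have been deleted
        return False
    # A webhook can keep firing after its source is gone, delivering rows with
    # every field empty; only proceed if at least one record has a real value.
    return any(
        any(value not in EMPTY_VALUES for value in record.values())
        for record in records
    )


payload = {"data": [{"name": None, "email": ""}]}  # hypothetical webhook body
if not has_usable_records(payload.get("data")):
    print("No usable input; stopping before downstream steps")
```

Note that `0` and `False` count as real values here; only null, empty strings, and empty containers are treated as missing.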

5. Template duplication always breaks custom filters and output paths

I used a Make scenario as a template to clone a GPT extraction tool we use across clients. It parses form entries, runs them through OpenAI, and maps structured results into a Monday board. The original works fine. The copied version? It broke on every single run with no error message.

Turns out the output variables in the copied version kept the old IDs but pointed to stale modules. One filter was referencing a text field that didn’t exist in the new form, and Make didn’t throw a hard error; it just silently ran with undefined data.

- Always verify filters when duplicating

- Don’t assume output IDs carry over

- Use inline test values before trusting any mapping

- Document scenarios with a working test record ID

- Use color-coded emojis before module steps if several are similar (e.g. 🔵 for active, 🔴 for temp copies)

- Double-check scenarios that passed validation but returned zero-length arrays; those slipped past the obvious checks

You can’t trust Make’s visual clarity without inspection — and documentation doesn’t save you if someone copies your scenario and never rechecks the filters.
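Make doesn’t run this check for you, so here is a hypothetical pre-flight audit in Python. It assumes you can list each filter’s label and referenced field (by hand or from an export), plus the new form’s field names and one known-good test record; the `label`/`field` structure is made up for illustration:

```python
def audit_copied_scenario(
    filters: list[dict[str, str]],
    form_fields: set[str],
    test_record: dict[str, object],
) -> list[str]:
    """List filters in a copied scenario that point at fields the new form lacks,
    or that get no value from a known-good test record."""
    problems = []
    for rule in filters:
        label, field = rule["label"], rule["field"]
        if field not in form_fields:
            problems.append(f"filter '{label}' references missing field '{field}'")
        elif test_record.get(field) in (None, ""):
            problems.append(f"filter '{label}' gets no value for '{field}' from the test record")
    return problems


# Hypothetical copy: the new form has no 'feedback_text' field
filters = [
    {"label": "has text", "field": "feedback_text"},
    {"label": "is buyer", "field": "type"},
]
print(audit_copied_scenario(filters, {"type", "email"}, {"type": "buyer"}))
```

Run it once right after duplicating, before turning the copy on.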

6. Your prompt engineering is meaningless if field transforms change

I had this great working prompt in a Zap code step — turning a string of customer feedback into classification tags that output to Airtable. Then someone upstream changed a transform in the formatter to truncate text after 280 characters.

No one mentioned it, of course. Suddenly the AI responses started leaning generic and missing edge cases. I burned half a day tweaking the OpenAI model settings. Turns out I was feeding it clipped garbage.

The fix? I built a hidden log table in Airtable that stores both the raw input to the prompt and the AI response, timestamped, for every run. It adds log bloat, but now I can see when inputs shift before I waste time rewriting prompt logic.
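A Python sketch of what each log row could hold, plus a crude check for the kind of silent 280-character clipping that bit me. Column names are hypothetical, writing the row to Airtable is left out, and the thresholds are arbitrary:

```python
from datetime import datetime, timezone
from typing import Optional


def build_log_row(raw_input: str, ai_response: str) -> dict:
    """One row for a hidden prompt-log table: exact input, output, and a timestamp."""
    return {
        "logged_at": datetime.now(timezone.utc).isoformat(),
        "input_length": len(raw_input),
        "raw_input": raw_input,
        "ai_response": ai_response,
    }


def suspected_length_cap(
    rows: list[dict], min_rows: int = 10, min_share: float = 0.5
) -> Optional[int]:
    """Crude heuristic: if many logged inputs share the exact maximum length,
    something upstream is probably truncating them. Returns that length or None."""
    lengths = [row["input_length"] for row in rows]
    if len(lengths) < min_rows:
        return None  # not enough history to judge
    longest = max(lengths)
    share_at_max = lengths.count(longest) / len(lengths)
    return longest if share_at_max >= min_share else None


rows = [{"input_length": 280}] * 6 + [{"input_length": 95}] * 4
print(suspected_length_cap(rows))  # 280
```

If a cap shows up, look upstream at formatter steps before touching the prompt.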

Also: write the actual field name being used in the prompt inside the prompt, like “Use ‘description_long_raw’ as input.” It’s self-documenting. It sounds dumb, but when someone renames a field, you can spot it just by reading the prompt, because its wording no longer matches the mapping.
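The same idea in code: a Python sketch of a prompt builder that states its input field in the prompt text and fails loudly if the record no longer has that field. `build_prompt` and the template wording are illustrative, not the actual prompt from this Zap:

```python
PROMPT_TEMPLATE = (
    "Use '{field_name}' as input.\n"
    "Classify the customer feedback below into tags.\n\n"
    "{field_name}:\n{value}"
)


def build_prompt(record: dict, field_name: str = "description_long_raw") -> str:
    """Build the prompt, naming the source field inside the prompt text itself."""
    if field_name not in record:
        # A renamed field fails here instead of quietly sending an empty prompt
        raise KeyError(f"prompt expects '{field_name}', record has {sorted(record)}")
    return PROMPT_TEMPLATE.format(field_name=field_name, value=record[field_name])


print(build_prompt({"description_long_raw": "Checkout keeps timing out on mobile"}))
```

Raising instead of defaulting to an empty string is the point: a rename should break the run visibly, not produce generic tags.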

7. Naming conventions solve nothing but avoid total chaos

One week I had three different automations named “Google Form Intake” — one in Zapier, one in Make, and one in n8n — all built by different people, all slightly different. None labeled with the client name, list target, or output structure. Just vibes.

We now include a short prefix (3-letter team code), the destination (intake-form → slack-notify), and the date-last-updated in hover text (saved as a comment in Zapier or a Notion table). It’s not a fix — people still mislabel things — but it makes it *possible* to trace or document flows without brute-force clicking every tab. Which I still do sometimes.
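If you want the prefix and source → destination parts enforced rather than remembered, a tiny builder and checker helps. A Python sketch, assuming the exact layout `TEAM source → destination` (the spacing, the arrow, and the lowercase hyphenated slugs are my assumptions; the date stays in hover text, not the name):

```python
import re
from typing import Optional

# Assumed layout: 3-letter team code, then source → destination slugs
NAME_PATTERN = re.compile(
    r"^(?P<team>[A-Z]{3}) (?P<source>[a-z0-9-]+) → (?P<destination>[a-z0-9-]+)$"
)


def build_name(team: str, source: str, destination: str) -> str:
    """Assemble a name and refuse anything that doesn't fit the convention."""
    name = f"{team.upper()} {source} → {destination}"
    if not NAME_PATTERN.match(name):
        raise ValueError(f"name does not follow the convention: {name!r}")
    return name


def parse_name(name: str) -> Optional[dict[str, str]]:
    """Split a conforming name into its parts, or return None for legacy names."""
    match = NAME_PATTERN.match(name)
    return match.groupdict() if match else None


print(build_name("ops", "intake-form", "slack-notify"))  # OPS intake-form → slack-notify
print(parse_name("Google Form Intake"))                   # None
```

Running `parse_name` over an exported list of automation names is a quick way to find the legacy ones that still need renaming.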

There’s no universal naming system that will survive reorgs and surprises, but if your docs reflect names nobody actually uses in the platform, you’ve lost before you start. Use the ugly internal names. Screenshot them if you have to. Build your documentation around what shows up on screen during a panic.

8. The only stable documentation lives closest to the trigger point

This sounds obvious but took too long to sink in: anything that lives externally — Google Drive folders, Confluence wikis, Airtable documentation tables — will fall out of sync unless it’s tied as close as possible to where the automation fires.

Now I use inline comments on Zap steps, scenario annotation in Make, or hidden fields in Airtable forms. They’re not pretty, but they’re visible during chaos. When you’re debugging something that fired wrong at midnight, you won’t open the wiki. You’ll look at the trigger step.

Hidden Airtable fields with comments are a favorite. I include a field called automation_context_log that’s not shown in views, but contains notes like: “Field A source = ‘Form B’ — only fires if Type = buyer.” I edit these inline, right after filing a bug. If someone else is in the base, they see it immediately. No tabs, no context switch.
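If you’d rather update that field from a script than by hand, the note-appending part is simple. A Python sketch, assuming newest-first notes with an ISO date prefix (my convention, not an Airtable feature). The dict it returns is shaped like the body of an Airtable Web API record update (`PATCH /v0/{baseId}/{tableIdOrName}/{recordId}`), but check the current API reference before sending it:

```python
from datetime import date
from typing import Optional


def append_context_note(
    existing: Optional[str], note: str, today: Optional[date] = None
) -> str:
    """Prepend a dated note so the newest context is read first."""
    stamp = (today or date.today()).isoformat()
    entry = f"[{stamp}] {note.strip()}"
    return f"{entry}\n{existing}" if existing else entry


def context_log_patch(
    existing: Optional[str], note: str, today: Optional[date] = None
) -> dict:
    """Request body for updating the hidden automation_context_log field."""
    return {"fields": {"automation_context_log": append_context_note(existing, note, today)}}


print(context_log_patch(None, "Field A source = 'Form B', only fires if Type = buyer", date(2024, 5, 1)))
```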

It’s not central. It’s not scalable. But documentation that’s 70 percent accurate and visible *in-app* beats the slick Notion page no one updated in two weeks.