Prompt-Based Affiliate Content Automation That Mostly Works

1. Using product databases to seed reliable prompt workflows

Some of the prompt chains I’ve built for affiliate posts start with Airtable, Notion, or Coda holding hundreds of SKUs, usually from a partner feed or scraped data (hello, ImportJSON sheets on life support). Consistent inputs from these tables are the only thing that keeps the downstream prompts from drifting into hallucinated model numbers or twenty identical air purifiers.

Here’s one thing that broke me: Notion tables with rich text properties can pass stray line breaks into Make and Zapier even though the preview looks fine. You’d think it’s a basic copy-paste, but the API turns “UL-listed” into “UL- listed” mid-stream. If your prompt says, “only suggest products that are UL-listed,” the generated snippet skips the product entirely because the term no longer matches. It took staring at the raw token stream in the OpenAI Playground to catch it.

After wiping and retrying the same data through Airtable, then through a CSV import, I landed on a pre-cleaning step: a Make scenario that reformats, flattens, and roughly sanity-checks every text field before it hits a prompt. Call it a buffer zone for API weirdness.
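For anyone not using Make, here is a minimal Python sketch of that kind of pre-cleaning pass. It assumes the damage looks like the “UL- listed” case (a hyphen followed by a stray break or space) plus invisible characters; the function name and the list of zero-width characters are my own assumptions, not anything Make or Notion provides.

```python
import re
import unicodedata

# Zero-width characters that rich-text editors can leave behind (assumed, non-exhaustive list)
_INVISIBLE = dict.fromkeys(map(ord, "\u200b\u200c\u200d\u2060\ufeff"))


def clean_text(value: str) -> str:
    """Flatten one rich-text field into a single clean line before it reaches a prompt."""
    # NFKC folds compatibility characters such as non-breaking spaces into plain ones;
    # translate() then deletes the zero-width characters (mapped to None)
    text = unicodedata.normalize("NFKC", value).translate(_INVISIBLE)
    # Re-join hyphenated terms split by a stray break or space: "UL- listed" -> "UL-listed"
    text = re.sub(r"(\w)-\s+(\w)", r"\1-\2", text)
    # Collapse every remaining run of whitespace (newlines, tabs, spaces) to one space
    return re.sub(r"\s+", " ", text).strip()
```

The hyphen rule is deliberately blunt: it will also glue intentional constructions like “pre- and post-filter” together, so test it against your own catalog before trusting it.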

2. Prompt structures for SEO-focused feature comparisons

Affiliate content isn’t just “here’s a product” — it’s “here’s which one is best for your kitchen countertop height during the summer in Georgia.” I’ve found that ChatGPT and Claude respond better with prompts that frame each comparison as a task, not a judgment.

Compare these 3 air purifiers for filtering wildfire smoke in small apartments.

Use facts only, no marketer language.

Highlight differences in CADR, noise level, and filter replacement cost.

This template consistently gives me usable base copy for affiliate spec tables. The problem comes when your source data is incomplete. When “filter replacement” is null, for example, the model guesses, and those guesses will absolutely say things like “most models require filter changes every six months,” which no one at Amazon confirmed. If that ends up cached in a Google Search snippet, guess whose affiliate clickthroughs get revoked for misinformation?

My workaround: before every call, a condition checks that all three comparison fields exist. If any of them is missing, that product is dropped from the prompt. You lose variety, but you avoid hallucinated recommendations.
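Here is roughly what that guard looks like in code, assuming each product row arrives as a dict. The field names (`cadr`, `noise_db`, `filter_replacement_cost`) are hypothetical stand-ins for whatever your table calls them, and skipping the call entirely when fewer than two products survive is my own addition.

```python
from typing import Optional

# Hypothetical column names; map them to your own table
REQUIRED_FIELDS = ("cadr", "noise_db", "filter_replacement_cost")


def has_all_fields(product: dict) -> bool:
    """True only if every comparison field is present and non-empty."""
    return all(product.get(f) not in (None, "") for f in REQUIRED_FIELDS)


def build_comparison_prompt(products: list[dict]) -> Optional[str]:
    """Build the comparison prompt from complete rows only; None means skip the call."""
    complete = [p for p in products if has_all_fields(p)]
    if len(complete) < 2:
        return None  # nothing left worth comparing
    lines = [
        f"- {p['name']}: CADR {p['cadr']}, noise {p['noise_db']} dB, "
        f"filter replacement {p['filter_replacement_cost']}"
        for p in complete
    ]
    return (
        f"Compare these {len(complete)} air purifiers for filtering wildfire smoke in small apartments.\n"
        "Use facts only, no marketer language.\n"
        "Highlight differences in CADR, noise level, and filter replacement cost.\n\n"
        + "\n".join(lines)
    )
```

Because incomplete rows never reach the prompt, the model has no null field to guess about.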

3. What happens when OpenAI function calls work too well

So obviously we wanted to get fancy and let GPT trigger real-time price lookups via function calling on an internal endpoint. You type: “Compare the current price of these 5 models,” and it fetches JSON from a Cloudflare Worker. At least that was the theory.

Here’s what actually happened: GPT chained two calls before our auth cache had warmed up, the second call blew past the rate limit, and suddenly three affiliate articles had broken shortcodes reading [price not available]. Nobody touched anything. It just… failed open, silently.

This stuff never shows up in logs unless you’re watching stdout during the failure. I caught it once because my UptimeRobot ping got angry about a missing CORS header. Turned out Cloudflare was silently stripping headers when bots hit too many origins inside five seconds. We had to stagger all function routing with randomized 300ms delays and cache every price for an hour.
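A sketch of the jitter-plus-cache idea, assuming a synchronous fetch function (`fetch` is a hypothetical stand-in for the Worker call). I read “300ms random delays” as a random pause of up to 300 ms before each uncached lookup; adjust if your stagger works differently.

```python
import random
import time
from typing import Callable

CACHE_TTL_SECONDS = 3600     # keep each price for one hour
MAX_JITTER_SECONDS = 0.3     # random pause of up to 300 ms before a live lookup

# sku -> (monotonic time fetched, price string)
_price_cache: dict[str, tuple[float, str]] = {}


def get_price(sku: str, fetch: Callable[[str], str]) -> str:
    """Return a cached price if it is under an hour old; otherwise fetch it after a random pause."""
    cached = _price_cache.get(sku)
    if cached is not None and time.monotonic() - cached[0] < CACHE_TTL_SECONDS:
        return cached[1]
    # Jitter spreads back-to-back function calls out so they don't arrive as one burst
    time.sleep(random.uniform(0, MAX_JITTER_SECONDS))
    price = fetch(sku)  # exceptions propagate; nothing is cached on failure
    _price_cache[sku] = (time.monotonic(), price)
    return price
```

Because a failed `fetch` raises instead of returning a placeholder, the caller can skip publishing rather than writing [price not available] into a live article.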

“If GPT function calling looks elegant, you haven’t stress-tested it on affiliate flows.” — me, angrily at 1am

4. Avoiding hallucinated features in AI-written product blurbs

I hate that this still happens: you pass a model some real data points, then it confidently claims a totally unrelated feature because “vacuum robots usually have that.” Even with GPT-4 and Claude, once the prompt runs long, the model starts filling gaps with statistically likely buyer bait.

Here’s a trick that helped: force the model into citation mode. Something like:

Write a summary of this product.

Only mention features listed directly in the data provided.

After each feature, append (SOURCE) as in:

“Includes HEPA filter (SOURCE)”

This seems dumb, but it exposes invented features. If the output has a feature with no (SOURCE) tag, I delete it. The tags work as a visual indicator during review, and reviewers on my team now scan for missing tags faster than they could read for accuracy. Does it break flow? Yep. But it cuts down post-publish edits by a lot.
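The missing-tag scan can be partly automated before a human looks at it. This sketch assumes the summary comes back as plain sentences or one feature per line; splitting on punctuation is a rough heuristic, and the function name is hypothetical.

```python
import re


def untagged_features(summary: str) -> list[str]:
    """Return sentences or lines that lack a (SOURCE) tag, for a reviewer to check or delete."""
    # Split after sentence punctuation or at line breaks; a heuristic, not a real sentence parser
    parts = re.split(r"(?<=[.!?])\s+|\n+", summary)
    return [p.strip() for p in parts if p.strip() and "(SOURCE)" not in p]
```

It flags only absent tags; it does not verify that a tagged feature actually appears in the source data.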

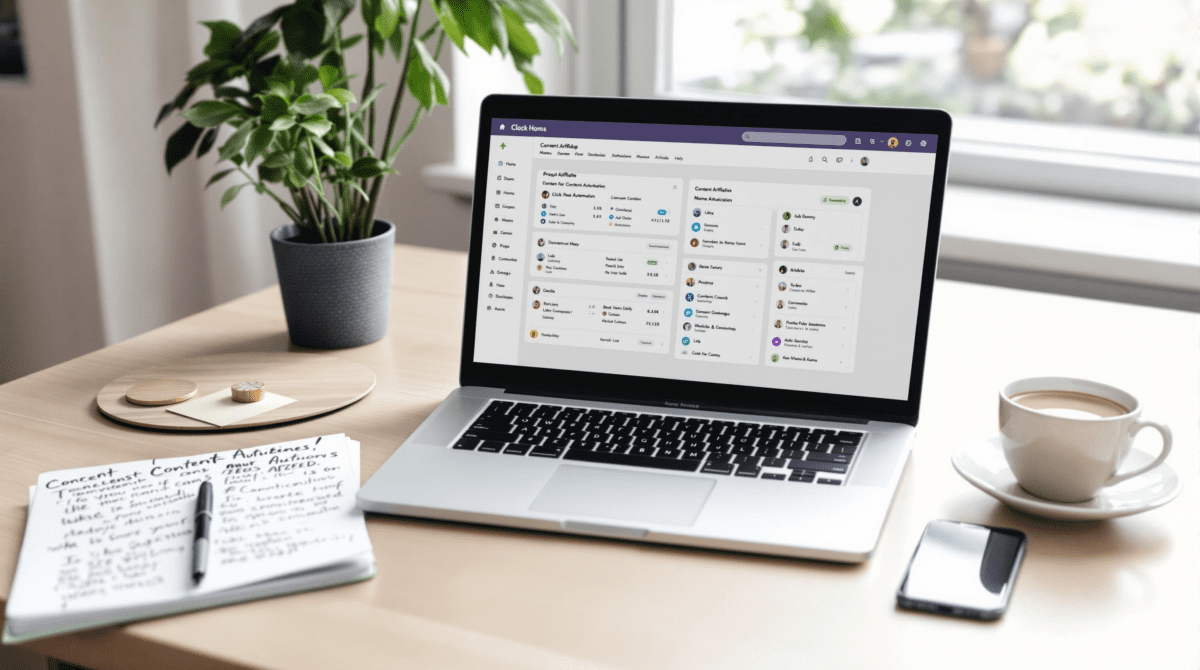

5. Organizing prompt templates inside Airtable or Notion fails fast

You’d think keeping prompt versions organized inside a table would be the cleanest way to collaborate. I tried it across multiple builds: Airtable’s rich text, Notion comments, even syncing Markdown into Obsidian via API. Every single time, the stored prompts had drifted out of sync by the second week.

Notion introduced invisible characters when copying out of code blocks. Airtable rows got orphaned when someone bulk-edited tags. Copy-pasting into Zapier’s OpenAI action deleted line breaks with no undo. I finally gave up and now store all current prompt templates as plain text blocks in Coda, synced weekly via n8n with a small checksum field. If the hash changes, I get pinged so I can diff prompt A vs B.
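The checksum-and-diff half of that setup is easy to reproduce outside n8n. A minimal sketch using Python’s standard `hashlib` and `difflib`; where you store the previous hash and text is left open, and the function names are hypothetical.

```python
import difflib
import hashlib
from typing import Optional


def prompt_checksum(text: str) -> str:
    """SHA-256 of the prompt, with CRLF normalized to LF so line-ending noise isn't a change."""
    return hashlib.sha256(text.replace("\r\n", "\n").encode("utf-8")).hexdigest()


def diff_if_changed(stored_hash: str, previous: str, current: str) -> Optional[str]:
    """Return a unified diff of previous vs current if the hash moved, else None."""
    if prompt_checksum(current) == stored_hash:
        return None
    lines = difflib.unified_diff(
        previous.splitlines(), current.splitlines(),
        fromfile="prompt_A", tofile="prompt_B", lineterm="",
    )
    return "\n".join(lines)
```

A non-None result is the “ping me” signal, and its body is the A vs B diff.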

Tips for not losing your prompt scaffold sanity

- Wrap every prompt in triple quotation marks before saving to text fields

- Add a version tag manually (like v1.4–gamma) for later comparison

- Store sample input-output pairs alongside every prompt

- Avoid Notion’s toggle blocks — they destroy line breaks on export

- Log every single prompt update with initials and timestamp

- Never allow inline editing in live views — always comment-and-clone
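If you want those tips enforced by structure rather than discipline, one option is a small immutable record per prompt revision. This is an illustrative sketch; the class and field names are hypothetical and not tied to Airtable, Notion, or Coda.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)  # frozen: a revision is cloned into a new record, never edited in place
class PromptVersion:
    """One logged revision of a prompt template (illustrative field names)."""
    version: str              # manual tag, e.g. "v1.4-gamma"
    text: str                 # the prompt body itself
    editor_initials: str      # who made the change
    updated_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))
    samples: tuple[tuple[str, str], ...] = ()  # (input, output) pairs stored alongside

    def as_text_field(self) -> str:
        """Delimit the prompt with triple quotation marks before saving it to a text field."""
        return f'"""{self.text}"""'
```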

No single tool handles this well. But if your team can’t tell which version of a prompt wrote a product description, fixing SEO misfires becomes impossible.

6. How prompt tokens affect layout length in auto-generated content

This one hurt unexpectedly when our generated content exceeded the character limit of our CMS’s meta description field. We were trimming to around 150 characters, assuming GPT counted length the same way. It doesn’t. Tokens are not characters, and the mapping is uneven: a common short word is often a single token, while a hyphenated or accented word like “super-resolution” or “éclair” can split into several pieces. Those splits land badly in length-constrained fields.

Inside a Zap, even trimming by length with Formatter won’t respect token or word boundaries; it just cuts at a character count. So a neat meta description like “Find the best home gym adapters for portable use” ends mid-word as “Find the best home gym adap…”, which barely means anything.

We now pre-calculate token count using OpenAI’s tiktoken package running on a small webhook. Every snippet passes through it before trimming. If it exceeds our limit (around 50–54 tokens for a meta), we shrink it based on token tolerances, not just characters. Slightly overengineered, but bought back hours in SEO audits.
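A sketch of a token-aware trim. Rather than hard-wiring a tokenizer, it takes a `count_tokens` callable; with tiktoken installed you could pass a counter like the one in the comment. Cutting at whole-word boundaries is my assumption about how to avoid the “adap…” problem, not necessarily how the webhook described above does it.

```python
from typing import Callable

# With tiktoken installed, a real counter might look like:
#   import tiktoken
#   enc = tiktoken.get_encoding("cl100k_base")
#   count_tokens = lambda s: len(enc.encode(s))


def trim_to_token_budget(text: str, max_tokens: int, count_tokens: Callable[[str], int]) -> str:
    """Drop whole words from the end until the text fits within max_tokens."""
    words = text.split()
    # Removing whole words means the result never ends mid-word
    while words and count_tokens(" ".join(words)) > max_tokens:
        words.pop()
    return " ".join(words)
```

For a meta description you would call it with a budget at the low end of the 50–54 token range; the tests below use a crude word counter only as a stand-in for a real tokenizer.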

7. Edge case where Zapier expects JSON but payload is HTML

This is small but infuriating. Our flow pulls in user reviews from a partner API formatted as HTML blocks, because they include rating stars as images. You pass this into a prompt that summarizes user sentiment, then pass the result to a Zapier webhook that posts it back into a database. Zapier sees the field labeled “content_json” and tries to parse it… as JSON. But it’s raw HTML. Silent failure again. No error, no payload, no retry.

The only clue was a blank cell on the Airtable end. We fixed it by wrapping the review HTML in a dummy JSON object, like this:

{"htmlString": "<div>4 stars! Love this blender.</div>"}

Even so, Zapier still occasionally failed when a quote character inside the HTML wasn’t escaped. This is the kind of bug that costs nothing in staging and nukes five entries live without any error logging. I now have a dummy Slack zap that pings me every time a key webhook skips execution. It’s noisy, but better than silence.
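The escaping problem goes away if the payload is built by a JSON serializer instead of string templating, for example in a Code by Zapier step or on your own webhook. A minimal Python sketch; `htmlString` matches the dummy key shown above, and the function name is hypothetical.

```python
import json


def wrap_html_for_webhook(html: str) -> str:
    """Serialize review HTML as a JSON string value.

    json.dumps escapes double quotes, backslashes, and newlines, so the HTML can
    contain any of them without breaking the payload.
    """
    return json.dumps({"htmlString": html})
```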

8. Using Claude for longer comparisons without triggering repetition

I found Claude 2 handles long-form product summaries better than GPT-4 — especially if you’re asking for feature sets across ten or more similar items. The trick is resisting the urge to encourage “helpfulness” in your prompt. That just guarantees lines like “This model is a great choice for those looking for reliability” fourteen times in a row.

Instead, prompt only for raw lists. Not descriptions, not recommendations. Just: “List stated noise levels for each product in decibels.” Then craft the higher-level descriptions in another prompt layer. Two-step flows reduce the repetition risk.
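A sketch of the two prompt layers as plain string builders, assuming product specs are available as raw text keyed by product name. The wording of both prompts and the “not stated” fallback are illustrative, not a tested recipe.

```python
def stage_one_prompt(specs: dict[str, str], attribute: str, unit: str) -> str:
    """Stage 1: ask only for a raw list of one stated attribute, nothing else."""
    blocks = "\n\n".join(f"{name}:\n{text}" for name, text in specs.items())
    return (
        f"List stated {attribute} for each product in {unit}.\n"
        "Return one line per product as 'name: value'; write 'not stated' if the data has no value.\n"
        "Do not describe or recommend anything.\n\n"
        + blocks
    )


def stage_two_prompt(raw_list: str) -> str:
    """Stage 2: write descriptions from the stage-1 list only."""
    return (
        "Using only the values below, write one short factual sentence per product.\n"
        "Do not add features, comparisons, or recommendations that are not in the list.\n\n"
        + raw_list
    )
```

The stage-1 model output becomes `raw_list` for stage 2, so the descriptive layer never sees the full spec sheet it might pad from.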

One “aha” moment came when I noticed Claude reusing the same phrasing pattern across otherwise distinct content. Not the exact wording, but the rhythm: “Overall, this model performs admirably with little noise interference,” in the same cadence, rephrased five times. Once I cut the input at each stage to under 300 words, the output finally started diverging again.
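To keep each stage under that 300-word ceiling automatically, product snippets can be batched by word count before they are sent. A minimal sketch; it counts only the product text, so leave headroom for the instructions themselves, and the function name is hypothetical.

```python
def batch_by_word_budget(snippets: list[str], max_words: int = 300) -> list[list[str]]:
    """Group product snippets so each batch totals fewer than max_words words."""
    batches: list[list[str]] = []
    current: list[str] = []
    current_words = 0
    for snippet in snippets:
        n = len(snippet.split())
        if n >= max_words:
            raise ValueError(f"snippet has {n} words; shorten it before batching")
        # Start a new batch if adding this snippet would reach the ceiling
        if current and current_words + n >= max_words:
            batches.append(current)
            current, current_words = [], 0
        current.append(snippet)
        current_words += n
    if current:
        batches.append(current)
    return batches
```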